Authors:

(1) Hamed Alimohammadzadeh, University of Southern California, Los Angeles, USA;

(2) Rohit Bernard, University of Southern California, Los Angeles, USA;

(3) Yang Chen, University of Southern California, Los Angeles, USA;

(4) Trung Phan, University of Southern California, Los Angeles, USA;

(5) Prashant Singh, University of Southern California, Los Angeles, USA;

(6) Shuqin Zhu, University of Southern California, Los Angeles, USA;

(7) Heather Culbertson, University of Southern California, Los Angeles, USA;

(8) Shahram Ghandeharizadeh, University of Southern California, Los Angeles, USA.

ABSTRACT

Today’s robotics laboratories for drones are housed in large rooms, at times the size of a warehouse. These spaces are typically equipped with permanent devices to localize the drones, e.g., Vicon infrared cameras. Significant time is invested in fine-tuning the localization apparatus to compute and control the position of the drones. One may use these laboratories to develop a 3D multimedia system with miniature-sized drones configured with light sources. As an alternative, this brave new idea paper envisions shrinking these room-sized laboratories to the size of a cube or cuboid that sits on a desk and costs less than 10K dollars. The resulting Dronevision (DV) will be the size of a 1990s television. In addition to light sources, its Flying Light Specks (FLSs) will be network-enabled drones with storage and processing capability to implement decentralized algorithms. The DV will include a localization technique to expedite development of 3D displays. It will act as a haptic interface for a user to interact with and manipulate the 3D virtual illuminations. It will empower an experimenter to design, implement, test, debug, and maintain software and hardware that realize novel algorithms in the comfort of their office without having to reserve a laboratory. In addition to enhancing productivity, it will improve the safety of the experimenter by minimizing the likelihood of accidents. This paper introduces the concept of a DV, the research agenda one may pursue using this device, and our plans to realize one.

1 INTRODUCTION

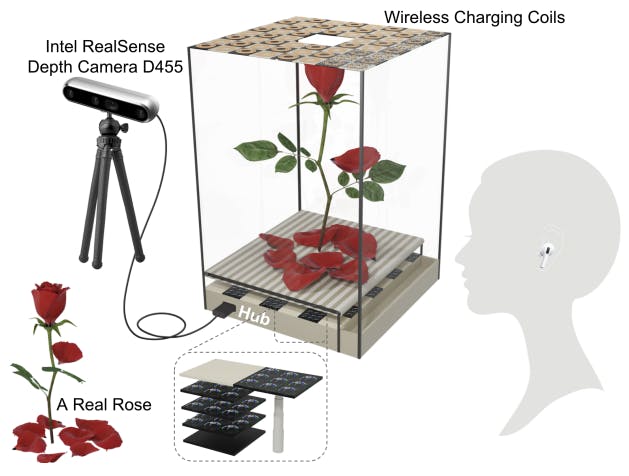

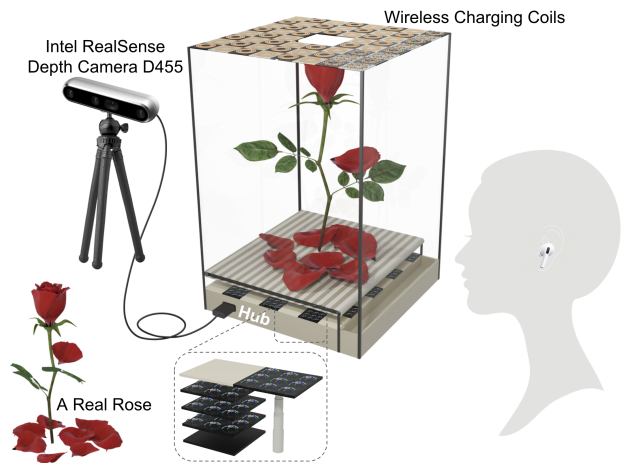

A Dronevision, DV, is a 3D display to design and implement next-generation multimedia applications using Flying Light Specks, FLSs. An FLS is a miniature-sized drone with one or more light sources with adjustable brightness [25–27]. A swarm of cooperating FLSs will render 3D shapes, point clouds, and animated sequences in a fixed volume, a 3D display. This Brave New Idea (BNI) envisions shrinking today’s room-sized robotics laboratories to the size of a cuboid that fits on a user’s desk for multimedia content creation and rendering. Figure 1 shows a DV setup configured for visual display only. Four glass panes enhance the user’s safety by preventing rogue FLSs from coming into contact with the user and injuring them. Once the rendering of an illumination is verified to be safe, the user may remove the glass panes. The DV may then illuminate the rose beyond the size of its base, see Figure 2. Moreover, a user may touch and interact with the illumination.

For 3D media content, a DV is a low-cost alternative to an expensive robotics laboratory shared by different research groups. It will provide an experimenter with a dedicated 3D testbed to debug and evaluate their hardware and software solutions. It will enable a content creator to design and implement diverse multimedia applications that range from healthcare to entertainment. With healthcare, a designer may develop a 3D FLS display to illuminate 3D MRI scans of a patient and their organs, empowering a physician to separate the different organs (illuminations) and examine them in real time. With entertainment, the designer may illuminate characters of multi-player games such as Minecraft and Fortnite so that they come alive and interact with one another on a tabletop. In addition to improving productivity, a DV enhances the safety of both the experimenter and the content creator.

Figure 1 shows a DV displaying an illuminated rose with a falling petal; its original may be captured using an Intel RealSense depth camera[1]. The top panel of the DV consists of wireless charging stations. FLSs with a low remaining flight time fly through the square hole and land on a charging coil to charge their batteries. Hundreds of FLSs may land on a charging coil and charge their batteries simultaneously.
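The charging behavior described above can be illustrated with a minimal sketch. The paper does not specify how "low remaining flight time" is decided; the `FLS` record, the `select_for_charging` helper, and the 60-second threshold below are all hypothetical names and values chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass
class FLS:
    fls_id: int
    remaining_flight_s: float  # estimated remaining flight time, in seconds


def select_for_charging(swarm, threshold_s=60.0):
    """Return ids of FLSs below the (assumed) threshold, most depleted first."""
    low = [f for f in swarm if f.remaining_flight_s < threshold_s]
    low.sort(key=lambda f: f.remaining_flight_s)
    return [f.fls_id for f in low]


# Hypothetical swarm of three FLSs; only two are below the threshold.
swarm = [FLS(1, 300.0), FLS(2, 45.0), FLS(3, 20.0)]
to_charge = select_for_charging(swarm)
```

Sorting by remaining flight time is one possible policy: it sends the FLS closest to depletion to a charging coil first. Other policies, such as staggering departures to keep an illumination intact, are equally plausible.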

The floor of the DV consists of a Hub, Tiles, a Terminus, and a conveyor belt. Each rod on the side acts as an elevator that delivers fully charged FLSs to the base of the DV, where they are stored on the black tiles for future deployment.

The Hub is comparable to today’s servers and provides both wired and wireless connectivity to peripheral devices such as a depth camera and earbuds. Black and white tiles are modular pieces that can be plugged into other tiles to construct DVs of different lengths and depths. Black tiles serve both as a hangar and a dispatcher of FLSs, which are moved up and down using an elevator, and white tiles are rigid pieces that keep the floor together. Black tiles containing FLSs may be stacked below the white tiles, see Figure 1. When an empty black tile is pushed below a white tile, a black tile with FLSs is pushed onto the elevator to bring its FLSs to the floor of the DV; these FLSs are then dispatched to illuminate virtual objects.
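One way to reason about the stacked black tiles is as a first-in, first-out queue: pushing an empty tile in at one end displaces a loaded tile onto the elevator at the other end. The sketch below models only that displacement. The `TileMagazine` class and its method name are hypothetical, and representing a tile as a list of FLS ids is an assumption made for illustration.

```python
from collections import deque


class TileMagazine:
    """Hypothetical model of the black tiles stacked below the white tiles."""

    def __init__(self, loaded_tiles):
        # Each tile is represented as a list of the FLS ids it carries.
        self._tiles = deque(list(tile) for tile in loaded_tiles)

    def push_empty_tile(self):
        """Push an empty tile in; return the tile displaced onto the elevator."""
        self._tiles.append([])        # the empty tile enters at the back
        return self._tiles.popleft()  # the front tile moves onto the elevator


magazine = TileMagazine([[1, 2], [3, 4]])
first = magazine.push_empty_tile()   # tile carrying FLSs 1 and 2
second = magazine.push_empty_tile()  # tile carrying FLSs 3 and 4
third = magazine.push_empty_tile()   # an empty tile: no FLSs remain
```

In this model the number of stored tiles stays constant, so once every loaded tile has been dispatched, further pushes only cycle empty tiles.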

Failed FLSs will fall onto a conveyor belt, which acts as a garbage collector that deposits failed FLSs in a Terminus. The Terminus is an empty row on the DV floor, e.g., the row closest to the user in Figure 1 and the row closest to the back panel in Figure 2. The conveyor belt protects FLSs sitting on black tiles from falling FLSs that have failed.

The Hub stops the conveyor belt before deploying one or more FLSs atop a black tile. A deployed FLS flies below the conveyor belt and past the Terminus to navigate to its coordinates in the DV’s display volume. Stopping the conveyor belt minimizes the likelihood of a failed FLS hitting and damaging a deployed FLS. Similarly, by placing the charging stations at the top of the DV, the likelihood of failed FLSs hitting and damaging a functional FLS that is in transit to a charging station is minimized.
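The ordering constraint in this paragraph, that the belt is stopped before any FLS is deployed, can be sketched with a context manager. The `ConveyorBelt` class, `belt_stopped`, and `deploy` are hypothetical names. Restarting the belt once deployment completes is an assumption; the text only states that the Hub stops the belt before deploying.

```python
from contextlib import contextmanager


class ConveyorBelt:
    """Hypothetical stand-in for the DV's conveyor belt controller."""

    def __init__(self):
        self.running = True

    def stop(self):
        self.running = False

    def start(self):
        self.running = True


@contextmanager
def belt_stopped(belt):
    """Stop the belt for the duration of the block, then restart it (assumed)."""
    belt.stop()
    try:
        yield belt
    finally:
        belt.start()


def deploy(belt, fls_ids, log):
    """Record each deployment together with the belt state at that moment."""
    with belt_stopped(belt):
        for fls_id in fls_ids:
            log.append((fls_id, belt.running))


belt = ConveyorBelt()
log = []
deploy(belt, [7, 8], log)
```

Using `try`/`finally` means the belt resumes collecting failed FLSs even if a deployment step raises an error.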

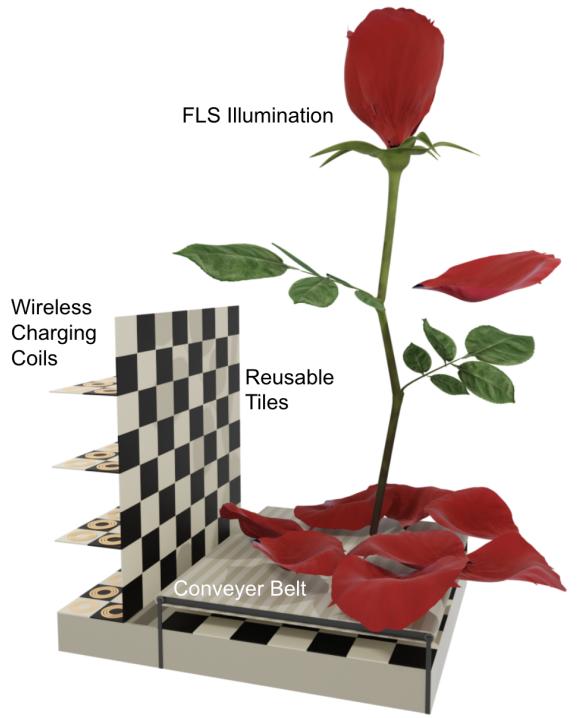

A DV will be both modular and configurable. Once an experimenter is satisfied with the safety of their setup in Figure 1, they may remove the glass panes and place the top as a back panel in front of the Terminus, enabling the DV to render illuminations that extend beyond the length and width of its base and allowing the user to physically interact with the illuminations (Figure 2). The haptic feedback provided by the illumination will include a combination of kinesthetic and tactile sensations. The kinesthetic (force) feedback will enable a user to experience the shape and hardness of an illuminated object. For example, a user may grasp a rose petal by its sides using their fingers. The user may press their fingers together to bend the rose petal to experience its stiffness. The tactile (skin-based) feedback will enable a user to experience the object’s surface properties. For example, FLSs with electrotactile actuators may provide the sharp sensation a user would expect when running their finger over a thorn on the virtual rose of Figure 2.

The contributions of this paper include:

• DV as an experimental 3D platform to design and implement next-generation multimedia applications using FLSs (Sections 1 and 2).

• Use of DV for haptic interactions (Section 3).

• Two multimedia systems research challenges raised by a DV (Section 4).

We present related work in Section 5 and brief conclusions and our current efforts to realize a DV in Section 6.

This paper is available on arXiv under a CC 4.0 license.

[1] The use of a camera is for illustration purposes only. The shown rose is a mesh file consisting of 65K points created using Blender [3, 26].